Project Postmortem

Retrospecting

Let’s discuss something that went well and something that didn’t go as well.

Our team cut scope very well when we needed to. This happened at a crucial time in the project: it was the final iteration and we wanted to build one of our most important features, Add Music. JumpyTunes could already view and play music, including a Song List that could manage repeat and shuffle playback, but to be useful as a general-purpose music player it needed to let users bring their own music. However, we ran into a problem.

Adding music was taking too long to build. There was a lot to do: we needed to parse the .wav file and extract the metadata, allow the user to edit the metadata, and save the info in the database. We also needed to manage the .wav file itself: our best idea was to copy the file into a folder owned by JumpyTunes and keep this folder in sync with the database. We quickly found that this was too much to handle in iteration 3 if we also wanted to address our tech debt and complete any other features. We looked at the project requirements and, with Lauren’s advice, we decided to cut down the feature. We settled on a simpler prototype that would still show off our data persistence, but without the tricky file management. We abandoned our in-progress file parsing and our ideas for managing .wav files, and we created an input form that let the user input a “song” that was really just the metadata for a song. The “song” made by the user would simply play one of the sample tracks we included with the app.

This scope cutting allowed us to complete the Add Song feature on time. It successfully demonstrated our data persistence and allowed us to show off some validation logic. We were sad to lose the functionality, but the whole team made the decision together and we agreed it was the best course of action.
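
To make the reduced feature concrete, here is a minimal Java sketch of the idea: validate the metadata from the form, then save a “song” that points at a bundled sample track. Every name in it (`SongEntry`, `AddSongSketch`, `SAMPLE_TRACK`, the in-memory list standing in for the database) is an assumption for illustration, not code from JumpyTunes, and the validation rules are guesses at what such a form might check.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: none of these names come from the JumpyTunes codebase.
final class SongEntry {
    final String title;
    final String artist;
    final String samplePath; // bundled sample track that actually plays

    SongEntry(String title, String artist, String samplePath) {
        this.title = title;
        this.artist = artist;
        this.samplePath = samplePath;
    }
}

final class AddSongSketch {
    // Assumed location of a sample track shipped with the app.
    static final String SAMPLE_TRACK = "samples/track1.wav";

    // In-memory stand-in for the persistence layer (the real app used a database).
    private final List<SongEntry> saved = new ArrayList<>();

    // Validates the user-entered metadata, then stores it with a sample track.
    SongEntry addSong(String title, String artist) {
        if (title == null || title.trim().isEmpty()) {
            throw new IllegalArgumentException("Title must not be empty");
        }
        if (artist == null || artist.trim().isEmpty()) {
            throw new IllegalArgumentException("Artist must not be empty");
        }
        SongEntry entry = new SongEntry(title.trim(), artist.trim(), SAMPLE_TRACK);
        saved.add(entry);
        return entry;
    }

    int savedCount() {
        return saved.size();
    }

    public static void main(String[] args) {
        AddSongSketch logic = new AddSongSketch();
        SongEntry entry = logic.addSong("  My Song ", "Me");
        System.out.println(entry.title + " -> " + entry.samplePath);
    }
}
```

Because no user file is ever parsed or copied, the only state to manage is the metadata row itself, which is what let us drop the file-sync work.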

Throughout the project, there was one point that consistently came up in our retrospectives. Every iteration, we did most of the work in the last few days before the deadline, and we struggled to get things done early. Our features were getting done on Wednesday morning instead of Monday or Tuesday as we had planned. This meant we had no time to refactor or improve our existing code, and we had to focus on getting features out instead of cleaning up tech debt. The result was that our tech debt kept growing and our development pace was hard to sustain.

This time management problem was tricky to solve. Before each deadline, we all put off our other responsibilities to make sure the release went well, but then we had to catch up on the rest of our lives before we could dive in again. This usually took until Friday or Saturday, and then we only had the weekend and the beginning of the next week to get something out for the next iteration. Our first late start caused a feedback loop that kept us running up against the deadline.

In iteration 3 we finally got some breathing room. We were still slow to start, but the longer iteration cycle (two weeks instead of one) meant we had more time to focus on paying down tech debt and getting features done early. We took our chance to get out of the negative cycle and we finished feature development on Monday and Tuesday instead of Wednesday morning. We also prioritized paying down tech debt throughout the whole iteration instead of leaving it until the features were done. Ultimately, iteration 3 went much better for us, but without the longer timeline we would likely have stayed on our rushed rhythm.

By The Numbers

Here are some statistics about our project, along with some commentary.

- 185 commits

- 116 merge requests

- 54 issues created

The total number of commits reported by GitLab seems low. Did we really commit only once or twice (about 1.6 times) per merge request on average? We squashed commits in our dev branch when we merged them, so that may have lowered the total number of commits.

Let’s look at the number of files and methods in the project:

| Package | Number of Files | Number of Methods |
|---|---|---|
| Presentation | 9 | 45 |
| Logic | 11 | 63 |
| Persistence | 12 | 60 |
| Enums | 3 | 0 |
| Exceptions | 15 | 0 |
| Objects | 5 | 31 |
| Total (incl. App.java) | 56 | 200 |

By count of methods, our Logic layer was split roughly into thirds. There were 20 methods relating to audio playback, and 22 methods relating to the playback queue (including up next, shuffle, and repeat). The remaining 21 methods covered validation, playlist management, and adding songs. Of course, this doesn’t take into account the complexity of each method.

Now let’s look at the number of tests:

| Package | Number of Test Files | Number of Test Methods |
|---|---|---|
| Logic | 11 | 113 |
| Persistence | 4 | 20 |
| Integration | 3 | 34 |
| Acceptance | 7 | 26 |
| Total | 25 | 193 |

Naturally, the Logic layer had by far the highest number of tests. However, we also wrote a sizeable number of integration and acceptance tests, especially considering that each test method in those packages is much larger than a typical unit test covering Logic.

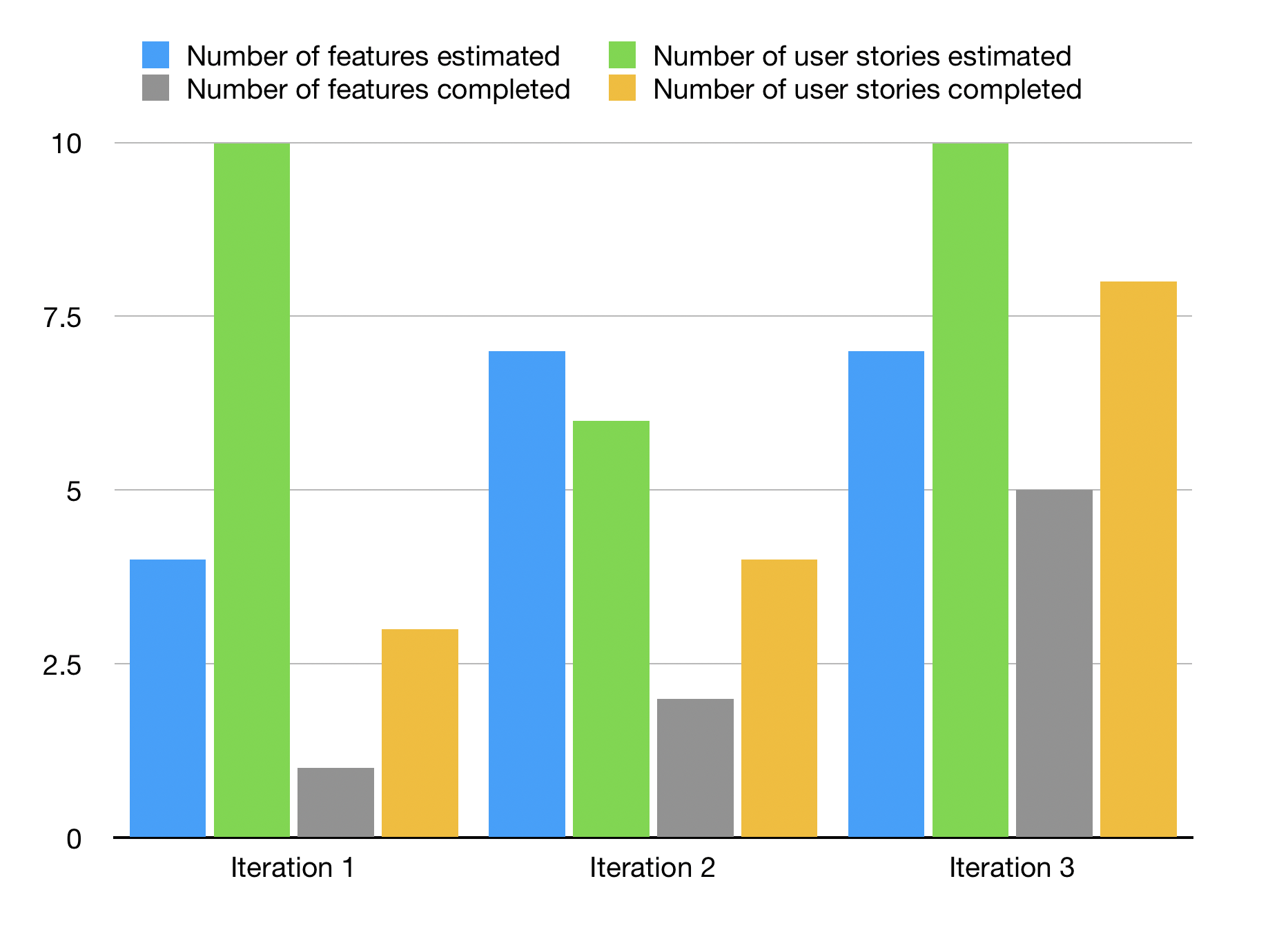

And finally, our velocity charts:

During iterations 2 and 3 we became much more studious about tracking time. This graph probably shows our increased attention on tracking as well as our increase in time spent.